The Robot Learning Lab maintains a range of equipment to support its research. The equipment available in the lab is listed below; if you would like to use any of it, please do not hesitate to contact Dr. Matthew Howard:

Adding an IMU allows your application to refine its depth awareness in any situation where the camera moves. This opens the door to rudimentary SLAM and tracking applications, allowing better point-cloud alignment. It also improves environmental awareness for robotics and drones. An IMU makes registration and calibration easier in handheld scanning use cases, and is also important in fields such as virtual and augmented reality.

UFACTORY uArm Studio offers a way to program the robot arm without writing code: the UFACTORY uArm can be set up to repeat intended paths in about 10 minutes. The UFACTORY uArm also has a Python SDK. Its fully open-source software platform offers a more flexible way to integrate the robot arm into your own system. Built from CNC-cut aluminium, the UFACTORY uArm weighs less than 2.2 kg, which makes it well suited to testing tasks.

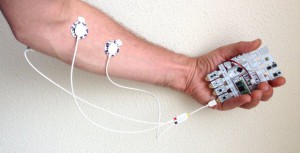

A high-accuracy wireless biosignal acquisition system capable of recording 3D orientation, rotation and acceleration, as well as muscle activity, from small stick-on sensors. This multi-sensor system allows human motion data to be collected easily outside the laboratory, and has been used to record arm activity for robotic control and human gait during running for biomechanical studies.

Sawyer is a collaborative robot made by Rethink Robotics. It is a fully ROS-compatible system and features an integrated display and two integrated cameras (one in its head, the other in its forearm), allowing for rapid systems development. Its joints are both actively and passively compliant and allow full torque control, making it ideal for human-robot interaction studies.

The AR10 hand is a 10-degree-of-freedom hand from ActiveRobots. The AR10 is controlled via a USB interface and features ROS integration, allowing for individual joint control and visualisation in RViz, as well as full hand control through motion planning systems such as MoveIt.

6DOF (3D position and 3D rotation) magnetic tracking with submillimetre accuracy.
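
As a rough illustration of what a 6DOF measurement contains, a pose can be stored as a 3D position plus a unit quaternion for the rotation, and used to map points from the sensor frame into the tracker's base frame. The sketch below is not the tracker's API: the class name `Pose6DOF`, the helper `quaternion_about_z`, the metre units and the (w, x, y, z) quaternion order are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DOF:
    """Hypothetical container for one 6DOF sample (not a vendor type)."""
    position: tuple    # (x, y, z), assumed to be in metres
    quaternion: tuple  # unit quaternion, assumed order (w, x, y, z)

    def transform_point(self, p):
        """Map a point from the sensor frame into the base frame."""
        w, x, y, z = self.quaternion
        # Rotation matrix built from the unit quaternion.
        r = (
            (1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)),
            (2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)),
            (2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)),
        )
        # Rotate first, then translate by the sensor position.
        return tuple(
            sum(r[i][j] * p[j] for j in range(3)) + self.position[i]
            for i in range(3)
        )


def quaternion_about_z(angle_rad):
    """Unit quaternion for a rotation of angle_rad about the z axis."""
    return (math.cos(angle_rad / 2), 0.0, 0.0, math.sin(angle_rad / 2))


# Sensor located at (1, 2, 3) and rotated 90 degrees about z.
pose = Pose6DOF((1.0, 2.0, 3.0), quaternion_about_z(math.pi / 2))
point_in_base = pose.transform_point((1.0, 0.0, 0.0))
```

Here a point one unit along the sensor's x axis ends up one unit along the base y axis, offset by the sensor position.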

6DOF (3D position and 3D rotation) magnetic tracking with a larger workspace. Used by the research group to obtain highly accurate position data in robotic grasping tasks.

Combined sewing and embroidery machine, used in the lab to design soft sensing systems, including trousers with embedded circuitry and fabric electrodes. Designs can be created in CAD software and loaded onto the machine for automatic embroidery.

Silver Reed SK830 Electronic Knitting Machine:

A fine-gauge, 250-needle knitting machine used with CAD software. It is used in the lab to design items of clothing that incorporate built-in sensors for minimally invasive human motion monitoring.

Humanoid robot with ten position-controlled joints, programmable for walking and grasping tasks. Used by the research group to explore control strategies, such as learning constraints for grasping.

Biosignal acquisition system used to collect muscle activity data (EMG) from humans. Using this system, the research group explores transferring this muscle activity data to low-cost prosthetic devices.
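
One common way to turn raw EMG into something a simple device can act on is to rectify the signal, smooth it into an envelope, and compare the envelope with a threshold. The sketch below illustrates that general idea only; it is not the group's method or the acquisition system's software. The function names, the window length, the threshold and the "open"/"close" commands are hypothetical, and the samples are assumed to be zero-mean.

```python
def emg_envelope(samples, window=5):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            # Drop the sample that has just left the window.
            running -= rectified[i - window]
        # Divide by the number of samples currently in the window.
        envelope.append(running / min(i + 1, window))
    return envelope


def grip_commands(envelope, threshold):
    """Map each envelope value to a hypothetical open/close command."""
    return ["close" if e > threshold else "open" for e in envelope]


# Toy signal: rest, a short burst of activity, then rest again.
raw = [0.0, 0.0, 1.0, -1.0, 1.0, -1.0, 0.0, 0.0]
envelope = emg_envelope(raw, window=2)
commands = grip_commands(envelope, threshold=0.75)
```

In practice the window and threshold would need tuning per user and per electrode placement.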

The uArm Metal is an open-source robot made by uFactory. It features four servos that provide position feedback, allowing for both joint-space and task-space control. The robot is back-driveable, allowing for a 'learning mode' in which the robot can record and play back demonstrated trajectories. The robot is controlled via USB and features a Python library for control, as well as integration with ROS.
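
The record-and-play-back idea behind such a learning mode can be sketched as two loops: sample the joint angles while the arm is guided by hand, then command the same angles back in order. The code below does not use the uArm Python library; `SimulatedArm`, `read_joint_angles` and `set_joint_angles` are hypothetical stand-ins so that the example runs without hardware.

```python
import time


class SimulatedArm:
    """Hypothetical stand-in for a back-driveable arm with position feedback."""

    def __init__(self, demonstration):
        # Scripted joint angles that mimic a user moving the arm by hand.
        self._demo = iter(demonstration)
        self.angles = [0.0, 0.0, 0.0, 0.0]
        self.commanded = []

    def read_joint_angles(self):
        # Each read advances the simulated hand-guided motion by one step.
        self.angles = list(next(self._demo, self.angles))
        return list(self.angles)

    def set_joint_angles(self, angles):
        self.angles = list(angles)
        self.commanded.append(list(angles))


def record(arm, n_samples, period_s=0.0):
    """Sample joint angles at a fixed period while the arm is being moved."""
    trajectory = []
    for _ in range(n_samples):
        trajectory.append(arm.read_joint_angles())
        time.sleep(period_s)
    return trajectory


def play_back(arm, trajectory, period_s=0.0):
    """Command the recorded joint angles in their original order."""
    for angles in trajectory:
        arm.set_joint_angles(angles)
        time.sleep(period_s)


demo = [[0, 10, 20, 30], [5, 15, 25, 35], [10, 20, 30, 40]]
arm = SimulatedArm(demo)
trajectory = record(arm, n_samples=len(demo))
play_back(arm, trajectory)
```

With real hardware the two hypothetical methods would be replaced by whatever read and write calls the arm's library provides, and `period_s` would set the sampling rate.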

The EyeX is a novel USB3 eye-tracking sensor originally developed for the gaming industry. Once calibrated for a user, the unit can provide x-y coordinates of the user's current gaze location. Tobii provide an SDK for development with the device; however, the SDK is currently Windows-only.
