UG Projects 2023-24

The following project descriptions and references should provide most of the details. The tools (such as programming languages and simulation platforms) and datasets used to realise a project depend on what is available.

For enquiries, please attend the following Q&A Teams meeting session.

| Q&A Session - Teams Meeting Link: |

| Teams Session, Tue 26/09/2023 09:00 - 09:30 am: click here to join |

Keywords: Classification, Computer Programming (MATLAB, Python, TensorFlow), Computational Intelligence, Convolutional Neural Network, Deep Learning, eXplainable Artificial Intelligence (XAI), Fuzzy Logic, Graph Neural Network, Generative Adversarial Network, Neural Network, Machine Learning, Population-Based Search Algorithms (Genetic Algorithm, Particle Swarm Optimisation, Q-learning, etc.), Reinforcement Learning, Support Vector Machine, Self-Organizing Map

Video Lectures: I have uploaded some lectures related to the above techniques to YouTube: https://www.youtube.com/channel/UCNg2UL-3AEYXT98NRD5TCmw

Requirements: Hardworking, self-motivated, creative, good programming skills, willing to learn.

Remarks:

- All projects are open-ended. They are pitched at UG level but can be taken to research level depending on how the problem is formulated and approached.

- All project topics are challenging and students need to gain NEW knowledge for the projects.

- The High Performance Computing (HPC) service can be used to speed up the learning process.

- To create an HPC account, raise a ticket in the Rosalind ticket system https://helpdesk.rosalind.kcl.ac.uk/ (accessible from the university network only) and complete the form after choosing “Request new account” in the help topics.

- Further information about HPC can be found in https://user-wiki.rosalind.kcl.ac.uk/doku.php?id=hpc_quick_start_guide

- I categorise the project outcomes into the following three levels (basic, merit and distinction) according to 1) the challenging level of the problem, 2) scientific and academic contributions (insights, ideas, knowledge, novelty), 3) the breadth and depth (coverage, quality, level of sophistication) of design, methodology, and results. The level of breadth and depth would give a sense of the contribution made to the project.

- Students are encouraged to publish their contributions and achievements in a journal paper.

- Technical papers can be downloaded from IEEE Xplore (https://ieeexplore.ieee.org/Xplore/home.jsp) and ScienceDirect (https://www.sciencedirect.com/) using your King's login details (through institutional login).

[HKL01] Explainable Machine Learning Framework for Alzheimer’s Disease Detection using Multi-modal Data

Alzheimer's disease (AD) is a progressive neurologic disorder that affects more than 850,000 people in the UK, costing the UK economy around £26bn per year. AD symptomatology is multimodal and correlated with cognitive scores, symptoms, demographics, etc. Reliable diagnoses can be confirmed by complementary information among the modalities. Consequently, AD detection methods should not only rely on measurements of a unique domain. However, it is difficult for experts to recognize all shifts in all modalities for AD patients, leading to misdiagnosis.

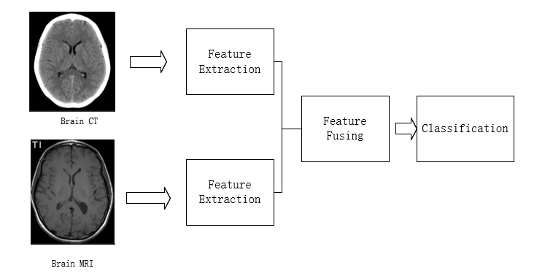

Therefore, this project focuses on a multimodal data approach (see figure). By integrating different image modalities, this project aims to develop a deep learning framework for AD detection and to make the detection framework more explainable. There are two basic objectives: 1) to develop a deep learning framework for AD detection based on feature fusion techniques that extract informative patterns from different modalities of image samples; 2) to apply existing explanation tools (such as SHAP) to demonstrate, e.g., which features contribute most to the AD detection framework.
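As a rough illustration of what feature fusion can mean, the sketch below performs feature-level fusion by concatenation: each modality's feature vector is scaled to unit length so that no modality dominates purely because of its numeric range, and the scaled vectors are then joined into one input vector. The modality names, the example values, and the choice of L2 normalisation are assumptions made for illustration only; the project does not prescribe a particular fusion scheme.

```python
import math

def l2_normalise(vector):
    """Scale a feature vector to unit Euclidean length (all zeros stay zeros)."""
    norm = math.sqrt(sum(v * v for v in vector))
    if norm == 0.0:
        return [0.0 for _ in vector]
    return [v / norm for v in vector]

def fuse_features(modalities):
    """Feature-level fusion: normalise each modality, then concatenate.

    `modalities` maps a modality name to that modality's feature vector.
    Names are visited in sorted order so feature positions stay stable
    from one subject to the next.
    """
    fused = []
    for name in sorted(modalities):
        fused.extend(l2_normalise(modalities[name]))
    return fused

# Hypothetical per-subject features, e.g. produced by two separate encoders.
subject = {
    "mri": [0.2, 0.8, 0.5],
    "pet": [10.0, 30.0],
}
print(fuse_features(subject))
```

The fused vector would then feed a shared classifier. Other designs, such as fusing per-modality predictions instead of features, are equally valid starting points.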

Reference Datasets:

- OASIS Brains - Open Access Series of Imaging Studies (oasis-brains.org)

- OASIS Alzheimer's Detection - Training | Kaggle

References:

[1] Qiu S, Miller M I, Joshi P S, et al. Multimodal deep learning for Alzheimer’s disease dementia assessment[J]. Nature communications, 2022, 13(1): 3404.

[2] Golovanevsky M, Eickhoff C, Singh R. Multimodal attention-based deep learning for Alzheimer’s disease diagnosis[J]. Journal of the American Medical Informatics Association, 2022, 29(12): 2014-2022.

[3] Zu C, Jie B, Liu M, et al. Label-aligned multi-task feature learning for multimodal classification of Alzheimer’s disease and mild cognitive impairment[J]. Brain imaging and behavior, 2016, 10: 1148-1159.

[4] Tăuţan A M, Ionescu B, Santarnecchi E. Artificial intelligence in neurodegenerative diseases: A review of available tools with a focus on machine learning techniques[J]. Artificial Intelligence in Medicine, 2021, 117: 102081.

[5] Song J, Zheng J, Li P, et al. An effective multimodal image fusion method using MRI and PET for Alzheimer's disease diagnosis[J]. Frontiers in digital health, 2021, 3: 637386.

[6] El-Sappagh S, Alonso J M, Islam S M R, et al. A multilayer multimodal detection and prediction model based on explainable artificial intelligence for Alzheimer’s disease[J]. Scientific reports, 2021, 11(1): 2660.

[7] Yu L, Xiang W, Fang J, et al. A novel explainable neural network for Alzheimer’s disease diagnosis[J]. Pattern Recognition, 2022, 131: 108876.

[8] Duamwan L M, Bird J J. Explainable AI for Medical Image Processing: A Study on MRI in Alzheimer’s Disease[C]//Proceedings of the 16th International Conference on PErvasive Technologies Related to Assistive Environments. 2023: 480-484.

[9] Van der Velden B H M, Kuijf H J, Gilhuijs K G A, et al. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis[J]. Medical Image Analysis, 2022, 79: 102470.


Reference Code:

Before starting the project,

1) Apply for HPC at our university

2) Set up the deep learning environment and become familiar with deep learning platforms, including PyTorch and TensorFlow

3) Learn the fundamentals of convolutional neural networks and explanation mechanisms

[HKL02] Developing an Explainable Machine Learning Model for CO2 Prediction in Neonatal Care to Enhance Respiratory Support

In the field of medicine, closed-loop systems utilize monitored data to effectively manage dynamic processes [1]. Within neonatal care, closed-loop oxygen control systems are employed to safely maintain optimal oxygen levels, thereby reducing the risk of complications [2]. Premature infants often require invasive mechanical ventilation to support their respiration [3]. The goal of assisted ventilation is to carefully regulate both oxygen and CO2 levels to prevent respiratory failure. CO2 elimination hinges on minute ventilation, a combination of tidal volume and respiratory rate, and excessive volume delivery can potentially damage the delicate lung tissue [3]. While monitoring CO2 levels is of utmost importance, the development of closed-loop CO2 control systems for neonates presents challenges due to unique anatomical factors [5]. Recent innovations in continuous tidal capnography offer accurate measurements and the ability to predict CO2 values [6]. Maintaining proper CO2 levels is particularly critical for premature infants as extreme deviations can lead to severe complications [7].

A dataset including 40 infants with approximately 12,000 neonatal respiratory data points will be provided (by Prof. Theodore Dassios, Department of Women & Children's Health, King's College London). This dataset corresponds to the activity of the previous 12 months with a recruitment rate of 50% based on availability of medical and nursing notes. This project aims to create an explainable machine learning model to predict CO2 levels using inspiratory pressures and data from the dataset.

Creating an explainable artificial intelligence (XAI) and machine learning model for CO2 prediction may involve the following steps.

1. Data preprocessing

- Obtain a dataset containing relevant features, including inspiratory pressures and corresponding CO2 levels.

- Ensure data quality by addressing missing values, outliers, and noise.

- Split the dataset into training, validation, and test sets to evaluate model performance (see step 4).

2. Feature Selection:

- Identify and select the most important features that influence CO2 levels, keeping interpretability in mind.

- Create additional features if necessary, and perform feature engineering based on domain knowledge.

3. Model Selection:

- Use a deep-structured network model, e.g., a convolutional neural network (CNN) or a long short-term memory (LSTM) network, for prediction.

- Choose a machine learning algorithm that allows for interpretability. Some options include:

- Linear Regression

- Decision Trees

- Random Forest

- Gradient Boosting (e.g., XGBoost, LightGBM)

- Alternatively, pair a less interpretable model with post-hoc explanation methods designed for XAI, such as LIME (Local Interpretable Model-Agnostic Explanations) or SHAP (SHapley Additive exPlanations).

4. Data Splitting:

- Split the data into training, validation, and test sets using a standard ratio (e.g., 70% for training, 15% for validation, 15% for testing).
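A minimal sketch of the 70/15/15 split is given below. Because the dataset contains many data points per infant, the sketch keeps all rows from one infant in the same subset, so the test set contains only unseen infants. This grouping is an assumption about how the data should be handled, not a stated project requirement, and the `infant_ids` layout is hypothetical.

```python
import random

def split_by_group(group_ids, train=0.70, val=0.15, seed=0):
    """Return (train, val, test) lists of row indices, keeping each group whole.

    `group_ids[i]` is the group (here: infant) that row i belongs to.
    Whatever is not assigned to train or val goes to test.
    """
    groups = sorted(set(group_ids))
    random.Random(seed).shuffle(groups)       # reproducible shuffle
    n_train = round(len(groups) * train)
    n_val = round(len(groups) * val)
    train_groups = set(groups[:n_train])
    val_groups = set(groups[n_train:n_train + n_val])

    train_rows, val_rows, test_rows = [], [], []
    for row, group in enumerate(group_ids):
        if group in train_groups:
            train_rows.append(row)
        elif group in val_groups:
            val_rows.append(row)
        else:
            test_rows.append(row)
    return train_rows, val_rows, test_rows

# Hypothetical layout: 40 infants with 5 recordings each.
infant_ids = [row // 5 for row in range(200)]
train_rows, val_rows, test_rows = split_by_group(infant_ids)
print(len(train_rows), len(val_rows), len(test_rows))
```

If rows from the same infant were spread across subsets, the validation and test scores could look better than they would on a new patient.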

5. Model Training:

- Train the chosen model on the training data.

- Use appropriate evaluation metrics for regression tasks, e.g., Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), or R-squared (R2).
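The four metrics named above can be computed directly from their definitions, as in the short sketch below. The function name and the example values are illustrative; in practice a library implementation would normally be used.

```python
import math

def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, MAE and R-squared for paired true/predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)            # residual sum of squares
    mse = ss_res / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    # R-squared is undefined when the targets have zero variance.
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return {"mse": mse, "rmse": math.sqrt(mse), "mae": mae, "r2": r2}

# Made-up values purely to exercise the function.
print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0]))
```

RMSE and MAE are in the same units as the target, which makes them easier to discuss with clinicians than MSE. R-squared is unitless and compares the model against always predicting the mean.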

6. Interpretability Techniques:

- Implement interpretability techniques alongside your model to make predictions more understandable. These techniques may include:

- Feature importance analysis to identify influential features.

- Partial dependence plots (PDPs) to visualize the effect of individual features on predictions.

- SHAP (SHapley Additive exPlanations) values to explain the contribution of each feature to a prediction.

- LIME (Local Interpretable Model-Agnostic Explanations) for creating locally faithful explanations.

- Decision tree visualization to show the decision-making process of tree-based models.
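To make the idea behind SHAP values concrete, the sketch below computes exact Shapley values for a single prediction by enumerating every subset of features, with "absent" features replaced by a baseline value. This brute-force version is only feasible for a handful of features and is not how the `shap` library works internally (it relies on approximations and model-specific algorithms). The toy model and its inputs are hypothetical and unrelated to the CO2 dataset.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction.

    A feature that is 'absent' from a coalition takes its baseline value.
    Cost grows as 2**n, so this is only practical for very few features.
    """
    n = len(x)

    def value(present):
        z = [x[i] if i in present else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                present = set(subset)
                total += weight * (value(present | {i}) - value(present))
        phi.append(total)
    return phi

def toy_model(z):
    """Hypothetical model with one interaction term (not a fitted predictor)."""
    return 2.0 * z[0] + 1.0 * z[1] + 0.5 * z[0] * z[2]

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi, sum(phi), toy_model(x) - toy_model(baseline))
```

The contributions sum to the difference between the prediction and the baseline prediction, and the interaction term is shared equally between the two features involved.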

7. Model Evaluation and Validation:

- Assess the model's performance on the validation set.

- Tune hyperparameters and adjust the model architecture if necessary to improve performance.

- Interpret the model's predictions using the implemented techniques.

8. Model Testing:

- Evaluate the final model on the test dataset to obtain an accurate estimate of its performance.

- Calculate and report relevant evaluation metrics while providing interpretability insights.

References

1. Pauldine R, Beck G, Salinas J, Kaczka DW. Closed-loop strategies for patient care systems. J Trauma. 2008 Apr;64(4 Suppl):S289-94. Epub 2008/04/11. doi:10.1097/TA.0b013e31816bce43

2. Sturrock S, Williams E, Dassios T, Greenough A. Closed loop automated oxygen control in neonates - a review. Acta paediatrica. 2019 Nov 12. doi:10.1111/apa.15089

3. Dassios T, Williams EE, Hickey A, Greenough A. Duration of mechanical ventilation and prediction of bronchopulmonary dysplasia and home oxygen in extremely preterm infants. Acta paediatrica. 2021 Feb 8. Epub 2021/02/09. doi:10.1111/apa.15801

4. Arnal JM, Garnero A, Novonti D, Demory D, Ducros L, Berric A, et al. Feasibility study on full closed-loop control ventilation (IntelliVent-ASV) in ICU patients with acute respiratory failure: a prospective observational comparative study. Critical care. 2013 Sep 11;17(5):R196. Epub 2013/09/13. doi:10.1186/cc12890

5. Schmalisch G. Current methodological and technical limitations of time and volumetric capnography in newborns. Biomedical engineering online. 2016 Aug 30;15(1):104. doi:10.1186/s12938-016-0228-4

6. Williams E, Dassios T, Greenough A. Assessment of sidestream end-tidal capnography in ventilated infants on the neonatal unit. Under review. 2020.

7. Tamura K, Williams EE, Dassios T, Pahuja A, Hunt KA, Murthy V, et al. End-tidal carbon dioxide levels during resuscitation and carbon dioxide levels in the immediate neonatal period and intraventricular haemorrhage. European journal of pediatrics. 2020 Apr;179(4):555-9. Epub 2019/12/19. doi:10.1007/s00431-019-03543-0

8. Wright CM, Booth IW, Buckler JM, Cameron N, Cole TJ, Healy MJ, et al. Growth reference charts for use in the United Kingdom. Archives of disease in childhood. 2002 Jan;86(1):11-4. Epub 2002/01/25. doi:10.1136/adc.86.1.11

9. Jobe AH, Bancalari E. Bronchopulmonary dysplasia. American journal of respiratory and critical care medicine. 2001 Jun;163(7):1723-9. Epub 2001/06/13. doi:10.1164/ajrccm.163.7.2011060

10. Australia B-I, United Kingdom Collaborative G, Tarnow-Mordi W, Stenson B, Kirby A, Juszczak E, et al. Outcomes of Two Trials of Oxygen-Saturation Targets in Preterm Infants. The New England journal of medicine. 2016 Feb 25;374(8):749-60. Epub 2016/02/11. doi:10.1056/NEJMoa1514212

11. Rodgers A, Singh C. Specialist neonatal respiratory care for babies born preterm (NICE guideline 124): a review. Archives of disease in childhood Education and practice edition. 2020 Dec;105(6):355-7. Epub 2020/04/30. doi:10.1136/archdischild-2019-317461

[HKL03] Control of Articulated Robots using Reinforcement-Learning Graph-Neural-Network-Based Techniques

Reinforcement Learning (RL) is a class of machine learning algorithms that generates an optimal policy by maximising the expected accumulated reward over the long term, even without knowing the dynamics of the environment. A Graph Neural Network (GNN) is based on graph theory: an object is described as a connected graph consisting of nodes and edges, and the network integrates information from neighbouring nodes to mine hidden features among the nodes. The aim of this project is to design an RL-GNN-based controller for articulated/spider-like robots that realises body movement and accomplishes some simple objectives such as position control. The performance of the controller should be evaluated both through reward functions designed with respect to the objectives and through training rates compared with other ANN training methods.
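The way a GNN integrates information from neighbouring nodes can be illustrated with a single message-passing round, sketched below. Each node replaces its feature vector with the mean of its own and its neighbours' features. A real GNN layer would also apply learned weights and a non-linearity, which are omitted here. The robot layout (one body, two legs with a hip and a knee each) and the feature values are hypothetical.

```python
def message_passing_step(features, edges):
    """One round of mean aggregation over an undirected graph.

    `features` maps node name -> feature vector (list of floats).
    `edges` is a list of (node_a, node_b) pairs.
    Each node is included in its own neighbourhood (a self-loop).
    """
    neighbours = {node: [node] for node in features}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)

    updated = {}
    for node, nbrs in neighbours.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[m][d] for m in nbrs) / len(nbrs) for d in range(dim)
        ]
    return updated

# Hypothetical articulated robot described as a graph of joints.
edges = [("body", "hip_l"), ("hip_l", "knee_l"),
         ("body", "hip_r"), ("hip_r", "knee_r")]
features = {"body": [1.0], "hip_l": [0.0], "knee_l": [0.0],
            "hip_r": [0.0], "knee_r": [0.0]}

h1 = message_passing_step(features, edges)
h2 = message_passing_step(h1, edges)
print(h1["knee_l"], h2["knee_l"])
```

After one round the knee has not yet received anything from the body, because they are two edges apart; after two rounds it has. Stacking rounds is how information spreads across the robot's structure.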

The project can be implemented using (but is not restricted to) the following optional languages and platforms:

- OpenAI Gym: Offers several existing models for training and is relatively user-friendly.

- ROS2: An open-source robotics middleware suite with plenty of available plugins and tools. Relatively difficult to start with but more powerful.

- MATLAB/Simulink: A proprietary multi-paradigm programming language and numeric computing environment, with plenty of downloadable powerful modules.

- Python: An interpreted high-level general-purpose programming language.

- TensorFlow: A free and open-source software library for machine learning.

Demonstration:

References:

- Graph Representation Learning (William L Hamilton)

- Reinforcement Learning- An Introduction

- NerveNet- Learning Structured Policy with Graph Neural Networks

Resources:

- TensorFlow: https://www.tensorflow.org/

- OpenAI Gym:

- Introduction: https://gym.openai.com/envs/Ant-v2/

- Source Code: https://github.com/openai/gym/blob/master/gym/envs/mujoco/ant.py

- ROS2 (Recommended working on Ubuntu Linux):

- Spider Robot Source Code: https://github.com/erlerobot/ros2_spider_maestro_controller

- GNN: Plenty of source code can be found in GitHub, mostly based on Python.

Skills: Robotics, control, programming, machine learning

[HKL04] Fall/Behaviour Detection/Prediction/Classification using Graph Neural Networks

The idea of the project is to create graph neural network and machine-learning models for the detection/prediction/classification of falls or behaviours.

References:

- Graph Representation Learning (William L Hamilton)

- Martínez-Villaseñor, L., Ponce, H., Brieva, J., Moya-Albor, E., Núñez-Martínez, J. and Peñafort-Asturiano, C., 2019. UP-fall detection dataset: A multimodal approach. Sensors, 19(9), p.1988.

- Hemmatpour, M., Ferrero, R., Montrucchio, B. and Rebaudengo, M., 2019. A review on fall prediction and prevention system for personal devices: evaluation and experimental results. Advances in Human-Computer Interaction, 2019.

- Tsai, T.H. and Hsu, C.W., 2019. Implementation of fall detection system based on 3D skeleton for deep learning technique. IEEE Access, 7, pp.153049-153059.

- Cai, X., Liu, X., An, M. and Han, G., 2021. Vision-Based Fall Detection Using Dense Block With Multi-Channel Convolutional Fusion Strategy. IEEE Access, 9, pp.18318-18325.

- Keskes, O. and Noumeir, R., 2021. Vision-based fall detection using ST-GCN. IEEE Access, 9, pp.28224-28236.

- Chami, I., Ying, Z., Ré, C. and Leskovec, J., 2019. Hyperbolic graph convolutional neural networks. Advances in neural information processing systems, 32, pp.4868-4879.

- FallFree: Multiple Fall Scenario Dataset of Cane Users for Monitoring Applications Using Kinect, https://ieeexplore.ieee.org/document/8334766

- Realtime Multi-Person Pose Estimation, https://github.com/ZheC/Realtime_Multi-Person_Pose_Estimation

- CMU Perceptual Computing Lab/openpose, https://github.com/CMU-Perceptual-Computing-Lab/openpose

Datasets

- Fall Datasets

- TST FALL DETECTION DATASET V2, https://ieee-dataport.org/documents/tst-fall-detection-dataset-v2#files

- FALL-UP DATASET, https://sites.google.com/up.edu.mx/har-up/

- CMU Panoptic Dataset, http://domedb.perception.cs.cmu.edu/

- The FARSEEING real-world fall repository: a large-scale collaborative database to collect and share sensor signals from real-world falls, http://farseeingresearch.eu/the-farseeing-real-world-fall-repository-a-large-scale-collaborative-database-to-collect-and-share-sensor-signals-from-real-world-falls/

- KFall: A Comprehensive Motion Dataset to Detect Pre-impact Fall for the Elderly based on Wearable Inertial Sensors, https://sites.google.com/view/kfalldataset

- Fall Dataset-Related Papers

- Analysis of Public Datasets for Wearable Fall Detection Systems, https://www.mdpi.com/1424-8220/17/7/1513

- A Large-Scale Open Motion Dataset (KFall) and Benchmark Algorithms for Detecting Pre-impact Fall of the Elderly Using Wearable Inertial Sensors, https://www.frontiersin.org/articles/10.3389/fnagi.2021.692865/full

Skills: Programming, machine learning

[HKL05] Classification of Cancer Cells using Explainable Machine Learning Techniques

Machine-learning-based classifiers will be developed for detecting and classifying cancer image samples. Advanced deep-learning structured networks (e.g., convolutional neural network, long short-term memory network, transformer, graph neural network, generative adversarial network, deep fuzzy network) combined with state-of-the-art techniques (machine attention, data augmentation, fuzzy logic) will be employed to develop classifiers for detection and classification purposes. eXplainable Artificial Intelligence (XAI) techniques (e.g., the model-agnostic techniques Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), as well as Layer-Wise Relevance Propagation (LRP)) will be employed to explain the decisions/actions made by the machine learning models. An explanation interface will be created to present the explainable report to the user.

Publicly available datasets will be used to support this project.

1. Tissue Image Analytics (TIA) Centre, (Dataset: https://warwick.ac.uk/fac/cross_fac/tia/data/pannuke, Papers: PanNuke Dataset Extension, Insights and Baselines, https://link.springer.com/chapter/10.1007/978-3-030-23937-4_2)

Useful Links:

- CS231n: Convolutional Neural Networks for Visual Recognition

- A Beginner's Guide To Understanding Convolutional Neural Networks

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier, https://arxiv.org/abs/1602.04938

- https://github.com/marcotcr/lime

- A Unified Approach to Interpreting Model Predictions, https://arxiv.org/abs/1705.07874

- https://github.com/slundberg/shap

- Interpretable Machine Learning A Guide for Making Black Box Models Explainable, https://christophm.github.io/interpretable-ml-book/

- A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI, https://ieeexplore.ieee.org/document/9233366

- InDepth: Layer-Wise Relevance Propagation, https://towardsdatascience.com/indepth-layer-wise-relevance-propagation-340f95deb1ea

- Layer-Wise Relevance Propagation: An Overview, https://link.springer.com/chapter/10.1007/978-3-030-28954-6_10 (The book can be downloaded through Institutional Login (Access via your institution, choose King's College London))

- Metrics for Explainable AI: Challenges and Prospects, https://arxiv.org/abs/1812.04608

- Evaluating the Quality of Machine Learning Explanations: A Survey on Methods and Metrics, https://www.mdpi.com/2079-9292/10/5/593/pdf

- Explaining Artificial Intelligence Demos, https://lrpserver.hhi.fraunhofer.de/

- Explaining Deep Learning Models for Structured Data using Layer-Wise Relevance Propagation, https://arxiv.org/abs/2011.13429

- Layer-Wise Relevance Propagation for Explaining Deep Neural Network Decisions in MRI-Based Alzheimer's Disease Classification, https://www.frontiersin.org/articles/10.3389/fnagi.2019.00194/full

- Explaining Deep Learning Models Through Rule-Based Approximation and Visualization, https://ieeexplore.ieee.org/document/9107404

Skills: Programming, machine learning

[HKL06] Self Driving with Reinforcement Learning

Self-driving is a concept that has attracted wide attention in recent years. An autonomous vehicle should be able to acquire driving skills and learn the traffic rules using large amounts of data from different sensors. For vehicles to make proper decisions while driving, the training process is crucial. Reinforcement learning, a branch of machine learning, finds an optimal policy by interacting with the environment and maximising the expected accumulated reward over the long term; it has been widely used and has proved to be an effective technique.

This project aims to apply reinforcement learning algorithms such as the Q-learning algorithm, DQN (Deep Q-Network), DDPG (Deep Deterministic Policy Gradients), etc., to train vehicles to learn to drive autonomously.
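As a starting point for the simplest of these algorithms, the sketch below runs tabular Q-learning on a toy one-dimensional corridor in which the agent must reach the right-most cell. The environment, reward, and hyperparameters are assumptions chosen to keep the example tiny; a driving task would need a simulator and, most likely, function approximation as in DQN or DDPG.

```python
import random

def train_q_learning(n_states=5, episodes=300, alpha=0.5, gamma=0.9,
                     epsilon=0.2, seed=0):
    """Tabular Q-learning on a corridor; action 0 moves left, action 1 moves right."""
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy action choice, with random tie-breaking.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            next_state = min(max(state + moves[action], 0), goal)
            reward = 1.0 if next_state == goal else 0.0

            # Q-learning update: move the estimate towards the bootstrapped target.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train_q_learning()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The learned values for moving right approach `gamma` raised to the number of remaining steps, which is the discounted accumulated reward described above.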

eXplainable Artificial Intelligence (XAI) techniques (e.g., the model-agnostic techniques Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), as well as Layer-Wise Relevance Propagation (LRP)) will be employed to explain the decisions/actions made by the machine learning models. An explanation interface will be created to present the explainable report to the user.

Following are some papers and tutorials as reference:

- Learning to Drive in a Day: https://arxiv.org/pdf/1807.00412.pdf

- Deep Reinforcement Learning for Driving Policy:

- Self-supervised Deep Reinforcement Learning with Generalized Computation Graphs for Robot Navigation: https://arxiv.org/abs/1709.10489

- MIT 6.S094: Deep Reinforcement Learning for Motion Planning: https://www.youtube.com/watch?v=QDzM8r3WgBw

Useful Links:

- CS231n: Convolutional Neural Networks for Visual Recognition

- A Beginner's Guide To Understanding Convolutional Neural Networks

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier, https://arxiv.org/abs/1602.04938

- https://github.com/marcotcr/lime

- A Unified Approach to Interpreting Model Predictions, https://arxiv.org/abs/1705.07874

- https://github.com/slundberg/shap

- Interpretable Machine Learning A Guide for Making Black Box Models Explainable, https://christophm.github.io/interpretable-ml-book/

- A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI, https://ieeexplore.ieee.org/document/9233366

- InDepth: Layer-Wise Relevance Propagation, https://towardsdatascience.com/indepth-layer-wise-relevance-propagation-340f95deb1ea

- Layer-Wise Relevance Propagation: An Overview, https://link.springer.com/chapter/10.1007/978-3-030-28954-6_10 (The book can be downloaded through Institutional Login (Access via your institution, choose King's College London))

- Metrics for Explainable AI: Challenges and Prospects, https://arxiv.org/abs/1812.04608

- Evaluating the Quality of Machine Learning Explanations: A Survey on Methods and Metrics, https://www.mdpi.com/2079-9292/10/5/593/pdf

- Explaining Artificial Intelligence Demos, https://lrpserver.hhi.fraunhofer.de/

- Explaining Deep Learning Models for Structured Data using Layer-Wise Relevance Propagation, https://arxiv.org/abs/2011.13429

- Layer-Wise Relevance Propagation for Explaining Deep Neural Network Decisions in MRI-Based Alzheimer's Disease Classification, https://www.frontiersin.org/articles/10.3389/fnagi.2019.00194/full

- Explaining Deep Learning Models Through Rule-Based Approximation and Visualization, https://ieeexplore.ieee.org/document/9107404

Here are some applications on YouTube:

- Deep Learning Cars:

- Reinforcement Learning for Autonomous Driving Obstacle Avoidance using LIDAR

- Self Driving Digital Car using Python CNN Reinforcement learning

- Self-supervised Deep Reinforcement Learning with Generalized Computation Graphs for Robot Navigation

Skills: Programming, machine learning, robotics