MSc Projects 2023-24

The following project descriptions and references should provide most of the details. The tools (such as programming languages and simulation platforms) and datasets used to realise each project depend on what is available.

For enquiries, please attend the following Q&A session on Teams.

| Q&A Session - Teams Meeting |

| Click here to join, Wednesday, 8th November 2023, 11:30 am - 12:00 noon |

Keywords: Classification, Computer Programming (Matlab, Python, TensorFlow), Computational Intelligence, Convolutional Neural Network, Deep Learning, eXplainable Artificial Intelligence (XAI), Fuzzy Logic, Graph Neural Network, Generative Adversarial Network, Neural Network, Machine Learning, Population-Based Search Algorithms (Genetic Algorithm, Particle Swarm Optimisation, Q-learning, etc.), Reinforcement Learning, Support Vector Machine, Self-Organizing Map

Video Lectures: I have uploaded some lectures related to the above techniques to YouTube: https://www.youtube.com/channel/UCNg2UL-3AEYXT98NRD5TCmw

Requirements: Hardworking, self-motivated, creative, good programming skills, willing to learn.

Remarks:

- All projects are open-ended. They are pitched at MSc level but can be taken to research level depending on how the problem is formulated and approached.

- All project topics are challenging and students need to gain NEW knowledge for the projects.

- The High Performance Computing (HPC) service can be used to speed up the learning process.

- To create an HPC account, raise a ticket in the Rosalind ticket system https://helpdesk.rosalind.kcl.ac.uk/ (accessible from the university network only) and complete the form after choosing “Request new account” in the help topics.

- Further information about HPC can be found in https://user-wiki.rosalind.kcl.ac.uk/doku.php?id=hpc_quick_start_guide

- I categorise the project outcomes into three levels (basic, merit and distinction) according to 1) how challenging the problem is, 2) the scientific and academic contributions (insights, ideas, knowledge, novelty), and 3) the breadth and depth (coverage, quality, level of sophistication) of the design, methodology, and results. The level of breadth and depth gives a sense of the contribution made to the project.

- Students are encouraged to publish their contributions and achievements in a journal paper.

- Technical papers can be downloaded from IEEE Xplore (https://ieeexplore.ieee.org/Xplore/home.jsp) and ScienceDirect (https://www.sciencedirect.com/) using your King's login details (through institutional login).

[HKL01] Control of Articulated Robots using Reinforcement Learning and Graph-Neural Networks

Reinforcement Learning (RL) is a family of machine learning algorithms that learns an optimal policy by maximising the expected accumulated reward over the long term, even without knowing the dynamics of the environment. A Graph Neural Network (GNN) is based on graph theory: an object is described as a connected graph consisting of nodes and edges, and the network integrates information from neighbouring nodes to mine hidden features among them. The aim of this project is to design an RL-GNN-based controller for articulated/spider-like robots that realises body movement and accomplishes some simple objectives such as position control. The performance of the controller should be evaluated both through the design of reward functions with respect to the objectives and through training rates compared with other ANN training methods.
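
The two ideas in this description can be illustrated with a minimal sketch. It is not a controller: it only shows the discounted sum of rewards that an RL agent tries to maximise in expectation, and one round of neighbour aggregation on a graph, which is the basic operation a GNN layer builds on. The four-node robot graph, the feature values and the function names are hypothetical, and a real GNN layer would add learned weights and a non-linearity.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards where the reward at step t is weighted by gamma**t."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def build_neighbours(num_nodes, edges):
    """Adjacency list for an undirected graph, with a self-loop on every node."""
    neighbours = {i: [i] for i in range(num_nodes)}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    return neighbours


def message_passing_step(features, neighbours):
    """Replace each node's feature vector by the mean over itself and its neighbours."""
    updated = []
    for node in range(len(features)):
        group = [features[j] for j in neighbours[node]]
        dim = len(features[node])
        updated.append([sum(vec[d] for vec in group) / len(group) for d in range(dim)])
    return updated


# Hypothetical robot graph: node 0 is the torso, nodes 1-3 are leg joints.
EDGES = [(0, 1), (0, 2), (0, 3)]
# Illustrative per-node observations: [joint angle, joint velocity].
features = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [2.0, 1.0]]

neighbours = build_neighbours(4, EDGES)
hidden = message_passing_step(features, neighbours)
episode_return = discounted_return([1.0, 1.0, 1.0], gamma=0.5)
```

After one step, the torso node holds the average of all four feature vectors, while each leg node has mixed only with the torso. Stacking several such steps lets information travel further along the robot's body.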

The project can be implemented with (but is not restricted to) the following optional languages and platforms:

- OpenAI Gym: Provides several existing models for training; relatively user-friendly.

- ROS2: An open-source robotics middleware suite with plenty of available plugins and tools. Relatively difficult to start with but more powerful.

- MATLAB/Simulink: A proprietary multi-paradigm programming language and numeric computing environment, with plenty of downloadable powerful modules.

- Python: An interpreted high-level general-purpose programming language.

- TensorFlow: A free and open-source software library for machine learning.

References:

- Graph Representation Learning (William L Hamilton)

- Reinforcement Learning: An Introduction

- NerveNet: Learning Structured Policy with Graph Neural Networks

Resources:

- TensorFlow: https://www.tensorflow.org/

- OpenAI Gym:

- Introduction: https://gym.openai.com/envs/Ant-v2/

- Source Code: https://github.com/openai/gym/blob/master/gym/envs/mujoco/ant.py

- ROS2 (Recommended working on Ubuntu Linux):

- Spider Robot Source Code: https://github.com/erlerobot/ros2_spider_maestro_controller

- Roboschool, https://openai.com/research/roboschool

- GNN: Plenty of source code can be found in GitHub, mostly based on Python.

- Understanding Convolutions on Graphs, https://distill.pub/2021/understanding-gnns

- A Gentle Introduction to Graph Neural Networks, https://distill.pub/2021/gnn-intro/

- Graph Convolutional Networks, https://tkipf.github.io/graph-convolutional-networks/

- Graph Attention Networks: Self-Attention Explained, https://towardsdatascience.com/graph-attention-networks-in-python-975736ac5c0c

Skills: Robotics, control, programming, machine learning

[HKL02] Detection/Prediction/Classification of Falls or Behaviours using Graph Neural Networks and Explainable AI Techniques

The detection, prediction, and classification of falls and human behaviours are significant across various domains. Fall detection is vital for ensuring the safety of the elderly and workers in high-risk environments like construction sites and healthcare facilities. The capacity to predict falls or deviations from normal behavior plays a pivotal role in preventing accidents and injuries that form a key component of proactive safety measures. Moreover, behavior classification yields valuable insights into individuals' actions that serve applications in healthcare monitoring, security, surveillance and user experience analysis. Advanced technologies, e.g., machine learning, explainable artificial intelligence (XAI), play a leading role in making our world safer and improving healthcare and overall quality of life.

In this project, the integration of XAI is of great significance for the detection, prediction, and classification of falls and human behaviours. XAI plays a pivotal role in providing transparency and clarity in the decision-making process of AI systems. This transparency is crucial for building trust and understanding why specific outcomes are reached. In the context of fall detection, XAI ensures that the basis for AI's decisions is clear, enabling appropriate and timely interventions to prevent accidents. Moreover, in behavior prediction and classification, XAI enables us to interpret the underlying rationale behind AI-generated insights. This level of transparency is of paramount importance, particularly in healthcare, safety, and various other applications, to allow us to make more informed, accurate, and reliable decisions.

This project aims to use advanced Graph Neural Networks (GNNs) and XAI to improve fall or behavior detection, prediction and classification. We will create an explainable GNN-based system that can quickly and accurately detect falls, predict possible accidents and understand different human behaviors. We will use XAI to make the system easy to understand and trust.

Remark: In this project, we will consider datasets related to falls, human behaviors, or both, depending on the specific requirements and objectives of our analysis.
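
As a rough illustration of how an explanation can be attached to a graph-based fall detector, the sketch below applies occlusion (a simple perturbation-based XAI technique): each joint's input is replaced by a reference value and the change in the model output is recorded as that joint's importance. Everything here is an assumption made for illustration: the five-joint skeleton, the normalised joint heights, and `fall_score`, which is a toy stand-in for a trained GNN rather than a real model.

```python
JOINT_NAMES = ["head", "torso", "hip", "left_knee", "right_knee"]
# Hypothetical skeleton: head-torso, torso-hip, hip-left knee, hip-right knee.
SKELETON_EDGES = [(0, 1), (1, 2), (2, 3), (2, 4)]


def aggregate(heights, edges):
    """One round of mean aggregation over each joint and its neighbours."""
    neighbours = {i: [i] for i in range(len(heights))}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    return [
        sum(heights[j] for j in neighbours[i]) / len(neighbours[i])
        for i in range(len(heights))
    ]


def fall_score(heights, edges=SKELETON_EDGES):
    """Toy stand-in for a trained GNN: low aggregated joint heights give a high score."""
    pooled = aggregate(heights, edges)
    return 1.0 - sum(pooled) / len(pooled)


def occlusion_importance(heights, baseline, edges=SKELETON_EDGES):
    """Drop in fall score when each joint is reset to its baseline (standing) height."""
    full = fall_score(heights, edges)
    importance = {}
    for i, name in enumerate(JOINT_NAMES):
        occluded = list(heights)
        occluded[i] = baseline[i]
        importance[name] = full - fall_score(occluded, edges)
    return importance


# Illustrative normalised joint heights (1.0 = head height when standing).
standing = [1.0, 0.8, 0.5, 0.3, 0.3]
fallen = [0.2, 0.2, 0.2, 0.3, 0.3]

importance = occlusion_importance(fallen, baseline=standing)
```

In this toy case the knees receive zero importance because their heights did not change, while the head, torso and hip account for the raised score. With a trained model the same loop can be run over joints, frames or sensor channels.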

References:

- Graph Representation Learning (William L Hamilton)

- Martínez-Villaseñor, L., Ponce, H., Brieva, J., Moya-Albor, E., Núñez-Martínez, J. and Peñafort-Asturiano, C., 2019. UP-fall detection dataset: A multimodal approach. Sensors, 19(9), p.1988.

- Hemmatpour, M., Ferrero, R., Montrucchio, B. and Rebaudengo, M., 2019. A review on fall prediction and prevention system for personal devices: evaluation and experimental results. Advances in Human-Computer Interaction, 2019.

- Tsai, T.H. and Hsu, C.W., 2019. Implementation of fall detection system based on 3D skeleton for deep learning technique. IEEE Access, 7, pp.153049-153059.

- Cai, X., Liu, X., An, M. and Han, G., 2021. Vision-Based Fall Detection Using Dense Block With Multi-Channel Convolutional Fusion Strategy. IEEE Access, 9, pp.18318-18325.

- Keskes, O. and Noumeir, R., 2021. Vision-based fall detection using ST-GCN. IEEE Access, 9, pp.28224-28236.

- Chami, I., Ying, Z., Ré, C. and Leskovec, J., 2019. Hyperbolic graph convolutional neural networks. Advances in neural information processing systems, 32, pp.4868-4879.

- FallFree: Multiple Fall Scenario Dataset of Cane Users for Monitoring Applications Using Kinect, https://ieeexplore.ieee.org/document/8334766

- Realtime Multi-Person Pose Estimation, https://github.com/ZheC/Realtime_Multi-Person_Pose_Estimation

- CMU Perceptual Computing Lab/openposePublic, https://github.com/CMU-Perceptual-Computing-Lab/openpose

- Understanding Convolutions on Graphs, https://distill.pub/2021/understanding-gnns

- A Gentle Introduction to Graph Neural Networks, https://distill.pub/2021/gnn-intro/

- Graph Convolutional Networks, https://tkipf.github.io/graph-convolutional-networks/

- Graph Attention Networks: Self-Attention Explained, https://towardsdatascience.com/graph-attention-networks-in-python-975736ac5c0c

Datasets

- Fall Datasets

- TST FALL DETECTION DATASET V2, https://ieee-dataport.org/documents/tst-fall-detection-dataset-v2#files

- FALL-UP DATASET, https://sites.google.com/up.edu.mx/har-up/

- CMU Panoptic Dataset, http://domedb.perception.cs.cmu.edu/

- The FARSEEING real-world fall repository: a large-scale collaborative database to collect and share sensor signals from real-world falls, http://farseeingresearch.eu/the-farseeing-real-world-fall-repository-a-large-scale-collaborative-database-to-collect-and-share-sensor-signals-from-real-world-falls/

- KFall: A Comprehensive Motion Dataset to Detect Pre-impact Fall for the Elderly based on Wearable Inertial Sensors, https://sites.google.com/view/kfalldataset

- Fall Dataset-Related Papers

- Analysis of Public Datasets for Wearable Fall Detection Systems, https://www.mdpi.com/1424-8220/17/7/1513

- A Large-Scale Open Motion Dataset (KFall) and Benchmark Algorithms for Detecting Pre-impact Fall of the Elderly Using Wearable Inertial Sensors, https://www.frontiersin.org/articles/10.3389/fnagi.2021.692865/full

Skills: Data analysis, programming, machine learning

[HKL03] Cancer Cell Classification with Explainable Machine Learning Methods

Machine-learning-based classifiers will be developed for detecting and classifying cancer image samples. Advanced deep-learning networks (e.g., convolutional neural network, long short-term memory network, transformer, graph neural network, generative adversarial network, deep fuzzy network), combined with state-of-the-art techniques (machine attention, data augmentation, fuzzy logic), will be employed to develop classifiers for detection and classification. eXplainable Artificial Intelligence (XAI) techniques (e.g., the model-agnostic techniques Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), as well as Layer-Wise Relevance Propagation (LRP)) will be employed to explain the decisions/actions made by the machine learning models. An explanation interface will be created to present the explanation report to the user.

Publicly available datasets will be used to support this project.
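
To give a feel for what a SHAP-style explanation computes, here is a minimal sketch that evaluates exact Shapley values by brute force for a tiny model. It is for illustration only: `toy_score` is a hypothetical scoring function over three made-up features, features outside a coalition are simply set to a baseline value, and the cost grows exponentially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `predict` at `instance`, relative to `baseline`.

    Features outside a coalition take their baseline value.
    """
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Probability that a coalition of this size precedes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {i}) - value(coalition))
        phi.append(total)
    return phi


def toy_score(x):
    """Hypothetical classifier score: one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_score, instance, baseline)
```

The additive feature receives its full contribution of 2.0, the two interacting features split their joint contribution of 1.0 equally, and the values sum to the difference between the score at the instance and the score at the baseline.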

1. Tissue Image Analytics (TIA) Centre, (Dataset: https://warwick.ac.uk/fac/cross_fac/tia/data/pannuke, Papers: PanNuke Dataset Extension, Insights and Baselines, https://link.springer.com/chapter/10.1007/978-3-030-23937-4_2)

Useful Links:

- CS231n: Convolutional Neural Networks for Visual Recognition

- A Beginner's Guide To Understanding Convolutional Neural Networks

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier, https://arxiv.org/abs/1602.04938

- https://github.com/marcotcr/lime

- A Unified Approach to Interpreting Model Predictions, https://arxiv.org/abs/1705.07874

- https://github.com/slundberg/shap

- Interpretable Machine Learning A Guide for Making Black Box Models Explainable, https://christophm.github.io/interpretable-ml-book/

- A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI, https://ieeexplore.ieee.org/document/9233366

- InDepth: Layer-Wise Relevance Propagation, https://towardsdatascience.com/indepth-layer-wise-relevance-propagation-340f95deb1ea

- Layer-Wise Relevance Propagation: An Overview, https://link.springer.com/chapter/10.1007/978-3-030-28954-6_10 (The book can be downloaded through Institutional Login (Access via your institution, choose King's College London))

- Metrics for Explainable AI: Challenges and Prospects, https://arxiv.org/abs/1812.04608

- Evaluating the Quality of Machine Learning Explanations: A Survey on Methods and Metrics, https://www.mdpi.com/2079-9292/10/5/593/pdf

- Explaining Artificial Intelligence Demos, https://lrpserver.hhi.fraunhofer.de/

- Explaining Deep Learning Models for Structured Data using Layer-Wise Relevance Propagation, https://arxiv.org/abs/2011.13429

- Layer-Wise Relevance Propagation for Explaining Deep Neural Network Decisions in MRI-Based Alzheimer's Disease Classification, https://www.frontiersin.org/articles/10.3389/fnagi.2019.00194/full

- Explaining Deep Learning Models Through Rule-Based Approximation and Visualization, https://ieeexplore.ieee.org/document/9107404

Skills: Data analysis, programming, machine learning

[HKL04] Interpretable Machine Learning for Early Detection and Explanation of Lower Back Pain Risk

This project will be co-supervised by Dr. Ernest Kamavuako.

Lower Back Pain (LBP) is a prevalent health issue affecting people of all ages, causing discomfort, disability, and economic burden. It is a leading cause of work absenteeism and poses a significant challenge to healthcare systems worldwide. Accurate prediction of LBP can help in early intervention and personalised treatment that ultimately improves the quality of life for affected individuals.

This project aims to develop a data-driven approach for predicting LBP using explainable machine learning algorithms and models. The goal is to create a reliable predictive tool that can assist healthcare professionals in identifying individuals at risk of developing LBP and provide insights into the factors contributing to their condition.

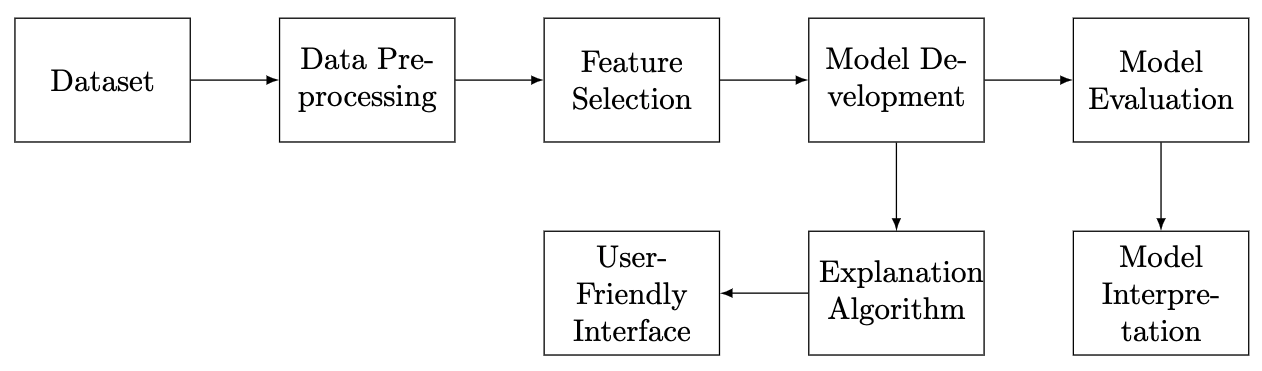

A systematic approach will be employed to develop a reliable predictive model. This includes data collection, preprocessing, feature selection, model development, evaluation, and interpretation. The methodology and steps are outlined below.

- Data Collection: We will gather a publicly available dataset containing various health, lifestyle-related or physical attributes, with a focus on lower back pain-related features. The availability of the features depends on the datasets we use.

- Data Preprocessing: The dataset will be cleaned and prepared for analysis. This includes handling missing values, scaling features and encoding categorical variables. The exact steps depend on which machine learning algorithms are used.

- Feature Selection: To build a robust predictive model, we will employ feature selection techniques to identify the most relevant attributes associated with LBP. The choice of technique depends on which machine learning algorithms are used. When traditional machine learning models are used, hand-crafted features are more important.

- For example, we can use various methods to pick the most important attributes. These methods include finding features highly connected to LBP (correlation-based), eliminating less important features one by one (recursive feature elimination), measuring how much information each feature holds about LBP (mutual information), using L1 regularization for sparsity (Lasso), looking at feature importance in decision trees (Random Forest and XGBoost), employing dimensionality reduction (PCA), using statistical tests (like chi-squared tests), iterative techniques (forward selection, backward elimination), and some algorithms naturally do this during training (L1-regularized linear models and decision trees). The choice depends on our dataset and the models we plan to use. Trying different methods will help us figure out which one works best for our project.

- Model Development: We will experiment with a range of machine learning algorithms. Generally, we will construct three distinct types of models for predicting LBP: interpretable models, deep learning models, and fuzzy models. Interpretable models will provide transparency that allows us to discern the most critical factors influencing LBP. Deep learning models will employ neural-network-based architectures to capture complicated data patterns that can enhance prediction accuracy. Fuzzy models will address uncertainties and imprecision in the data, which is particularly valuable in real-world LBP prediction scenarios.

- Interpretable Models: For interpretability, we can use Logistic Regression for binary classification, Decision Trees for transparent decision-making, Random Forest to combine trees and pinpoint crucial LBP factors, Linear Regression adapted for classification, and Naive Bayes for simple probability-based predictions.

- Deep Learning Models: We have options like Convolutional Neural Networks for image data, RNNs and LSTMs for sequences, and Feedforward Neural Networks for flexibility. Gradient-boosted tree methods such as XGBoost/LightGBM, although not deep learning models, can be included alongside them for structured data. Together these offer diverse approaches for LBP prediction.

- Fuzzy Models: We can use Fuzzy Logic Systems for dealing with uncertainty, Fuzzy Inference Systems for medical decisions, Fuzzy Cognitive Maps to understand complex interactions, and ANFIS, a hybrid of fuzzy logic and neural networks, for flexibility in uncertain LBP prediction scenarios.

- Model Interpretation and Explanation Algorithm: We will develop a comprehensive approach to model interpretation which utilises both model-specific and model-agnostic explanations, for both interpretable models and models that are inherently less explainable, such as deep learning models. The machine learning models, including Logistic Regression, Decision Trees, Random Forest, as well as Fuzzy Logic Systems, and others, will be scrutinised to identify, e.g., the most significant factors and rationales contributing to the prediction, which enables a deeper understanding of the underlying causes. For the models that are not inherently explainable, e.g., Convolutional Neural Networks, we will introduce an Explanation Algorithm step. This additional stage will involve the application of explanation algorithms like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations). These algorithms will generate both model-specific and model-agnostic post hoc interpretability for complex models, so that the LBP prediction process becomes more transparent and understandable.

- User-Friendly Interface: To make the model accessible to healthcare professionals and individuals, we will develop a user-friendly interface that presents predictions and interpretable explanations. It serves as the gateway for users to input relevant data, receive predictions regarding LBP, and gain insights into the reasoning behind these predictions. We focus on providing a straightforward and intuitive experience that makes it accessible to users with varying levels of technical expertise. By presenting not only predictions but also interpretable explanations for these predictions, we aim to give users a clear understanding of why a particular LBP prediction was made. This transparency enhances user trust, facilitates informed decision-making in the context of LBP, and offers valuable insights for healthcare practitioners and individuals seeking to manage and prevent LBP effectively.

- Model Evaluation: The models will be evaluated using appropriate performance metrics, and their interpretability will be assessed using explainability tools and techniques. In the process of developing our models to predict LBP, an essential phase involves evaluating their performance. We begin by dividing our dataset into training and test sets to ensure the models can perform well on unseen data. Using metrics like accuracy and precision, we measure how effectively each model predicts LBP. Cross-validation helps ensure the models are robust, and their consistency is assessed across different data splits. Depending on the type of model, we examine feature importance or learning progress, and for fuzzy models, we verify their capacity to handle uncertain data. We compare all our models to determine the most suitable one for real-world LBP prediction that helps us make an informed selection.
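
Two of the steps above, correlation-based feature ranking and cross-validated evaluation with accuracy and precision, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the eight-sample dataset and the feature names `feature_a` and `feature_b` are made up, and the midpoint-threshold rule is a deliberately simple stand-in for whichever model is actually chosen.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def rank_features(rows, labels, names):
    """Feature names sorted by absolute correlation with the label, strongest first."""
    scores = {
        name: abs(pearson([row[i] for row in rows], labels))
        for i, name in enumerate(names)
    }
    return sorted(names, key=lambda name: scores[name], reverse=True)


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred):
    predicted_pos = sum(p == 1 for p in y_pred)
    true_pos = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    return true_pos / predicted_pos if predicted_pos else 0.0


def k_fold_indices(n, k):
    """Yield (train, test) index lists; fold f tests every k-th sample starting at f."""
    for f in range(k):
        yield [i for i in range(n) if i % k != f], list(range(f, n, k))


def fit_threshold(values, labels):
    """Toy model: the midpoint between the two class means of a single feature."""
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def cross_validate(rows, labels, feature_index, k):
    """Return one (accuracy, precision) pair per fold."""
    results = []
    for train, test in k_fold_indices(len(rows), k):
        threshold = fit_threshold(
            [rows[i][feature_index] for i in train], [labels[i] for i in train]
        )
        y_pred = [1 if rows[i][feature_index] > threshold else 0 for i in test]
        y_true = [labels[i] for i in test]
        results.append((accuracy(y_true, y_pred), precision(y_true, y_pred)))
    return results


# Made-up data: feature_a separates the classes, feature_b is mostly noise.
names = ["feature_a", "feature_b"]
rows = [[1.0, 5.0], [2.0, 3.0], [3.0, 6.0], [4.0, 2.0],
        [6.0, 4.0], [7.0, 5.0], [8.0, 3.0], [9.0, 6.0]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

ranking = rank_features(rows, labels, names)
fold_results = cross_validate(rows, labels, names.index(ranking[0]), k=4)
```

The threshold is refitted on the training part of every fold, so each test sample is scored by a model that never saw it. Comparing the per-fold results gives a sense of how consistent a model is across data splits.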

Datasets:

- Lower Back Pain Symptoms Dataset, https://www.kaggle.com/datasets/sammy123/lower-back-pain-symptoms-dataset/data

- Lower Back Pain Data, https://www.kaggle.com/code/shrutimechlearn/lower-back-pain-data-87-highest-acc-detailed/notebook

- An Exploratory Data Analysis on Lower Back Pain, https://towardsdatascience.com/an-exploratory-data-analysis-on-lower-back-pain-6283d0b0123

- The NIH Minimal Dataset for Chronic Low Back Pain, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6791505/

- Dataset for the performance of 15 lumbar movement control tests in nonspecific chronic low back pain, https://www.sciencedirect.com/science/article/pii/S2352340922002748#tbl0001

- Vertebral Column, https://archive.ics.uci.edu/dataset/212/vertebral+column

References:

- CS231n: Convolutional Neural Networks for Visual Recognition

- A Beginner's Guide To Understanding Convolutional Neural Networks

- "Why Should I Trust You?": Explaining the Predictions of Any Classifier, https://arxiv.org/abs/1602.04938

- https://github.com/marcotcr/lime

- A Unified Approach to Interpreting Model Predictions, https://arxiv.org/abs/1705.07874

- https://github.com/slundberg/shap

- Interpretable Machine Learning A Guide for Making Black Box Models Explainable, https://christophm.github.io/interpretable-ml-book/

- A Survey on Explainable Artificial Intelligence (XAI): Toward Medical XAI, https://ieeexplore.ieee.org/document/9233366

- InDepth: Layer-Wise Relevance Propagation, https://towardsdatascience.com/indepth-layer-wise-relevance-propagation-340f95deb1ea

- Layer-Wise Relevance Propagation: An Overview, https://link.springer.com/chapter/10.1007/978-3-030-28954-6_10 (The book can be downloaded through Institutional Login (Access via your institution, choose King's College London))

- Metrics for Explainable AI: Challenges and Prospects, https://arxiv.org/abs/1812.04608

- Evaluating the Quality of Machine Learning Explanations: A Survey on Methods and Metrics, https://www.mdpi.com/2079-9292/10/5/593/pdf

- Explaining Artificial Intelligence Demos, https://lrpserver.hhi.fraunhofer.de/

- Explaining Deep Learning Models for Structured Data using Layer-Wise Relevance Propagation, https://arxiv.org/abs/2011.13429

- Layer-Wise Relevance Propagation for Explaining Deep Neural Network Decisions in MRI-Based Alzheimer's Disease Classification, https://www.frontiersin.org/articles/10.3389/fnagi.2019.00194/full

- Explaining Deep Learning Models Through Rule-Based Approximation and Visualization, https://ieeexplore.ieee.org/document/9107404

- Hoy, Damian, Christopher Bain, Gail Williams, Lyn March, Peter Brooks, Fiona Blyth, Anthony Woolf, Theo Vos, and Rachelle Buchbinder. 2012. “A Systematic Review of the Global Prevalence of Low Back Pain.” Arthritis & Rheumatism 64 (6): 2028–37.

- Hodges, Paul W., Jacek Cholewicki, and Jaap H. Van Dieën, eds. "Spinal control: the rehabilitation of back pain: state of the art and science." (2013).

- Downie, Aron, Christopher M. Williams, Nicholas Henschke, Mark J. Hancock, Raymond WJG Ostelo, Henrica CW De Vet, Petra Macaskill et al. "Red flags to screen for malignancy and fracture in patients with low back pain: systematic review." Bmj 347 (2013).

- Hartvigsen, Jan, Mark J. Hancock, Alice Kongsted, Quinette Louw, Manuela L. Ferreira, Stéphane Genevay, Damian Hoy et al. "What low back pain is and why we need to pay attention." The Lancet 391, no. 10137 (2018): 2356-2367.

Skills: Data analysis, programming, machine learning