We are interested in controlling a robotic finger using haptic feedback so that it can explore an object's surface with a controllable normal force, travel direction and speed. We developed an adaptive control method based on haptic servoing, in which the action of the finger is determined only by local contact information such as tangential force and surface shape. The algorithm allows a finger to follow an unknown object surface smoothly and adaptively, as a human does, without visual feedback or pre-registration.
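A single haptic-servoing step can be pictured as a hybrid command: slide along the local tangent at the requested speed while regulating the normal force towards its target. The following is a minimal sketch under stated assumptions, not the controller from the paper. The function name `haptic_servo_step`, the proportional force gain, and the convention that `normal` points from the object towards the finger are all hypothetical choices made for illustration.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _scale(a, s):
    return tuple(x * s for x in a)


def haptic_servo_step(force, normal, travel_hint,
                      target_normal_force, speed, force_gain):
    """Return a fingertip velocity command from local contact data only.

    force: contact force acting on the finger (N), as a 3-tuple.
    normal: estimated surface normal, pointing from object to finger.
    travel_hint: desired travel direction (need not lie in the tangent plane).
    All names and the simple P-control law are illustrative assumptions.
    """
    n = _scale(normal, 1.0 / math.sqrt(_dot(normal, normal)))
    measured_normal_force = _dot(force, n)

    # Project the travel hint onto the tangent plane of the surface.
    along_n = _dot(travel_hint, n)
    tangent = tuple(d - along_n * c for d, c in zip(travel_hint, n))
    t_len = math.sqrt(_dot(tangent, tangent))
    if t_len > 1e-9:
        v_tangent = _scale(tangent, speed / t_len)
    else:
        v_tangent = (0.0, 0.0, 0.0)  # hint is parallel to the normal

    # Too little force -> move into the surface (along -n); too much -> back off.
    error = target_normal_force - measured_normal_force
    v_normal = _scale(n, -force_gain * error)

    return tuple(a + b for a, b in zip(v_tangent, v_normal))
```

Because the command depends only on the current force and normal estimate, no model of the object's shape or position is needed, which matches the idea of acting purely on instantaneous contact information.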

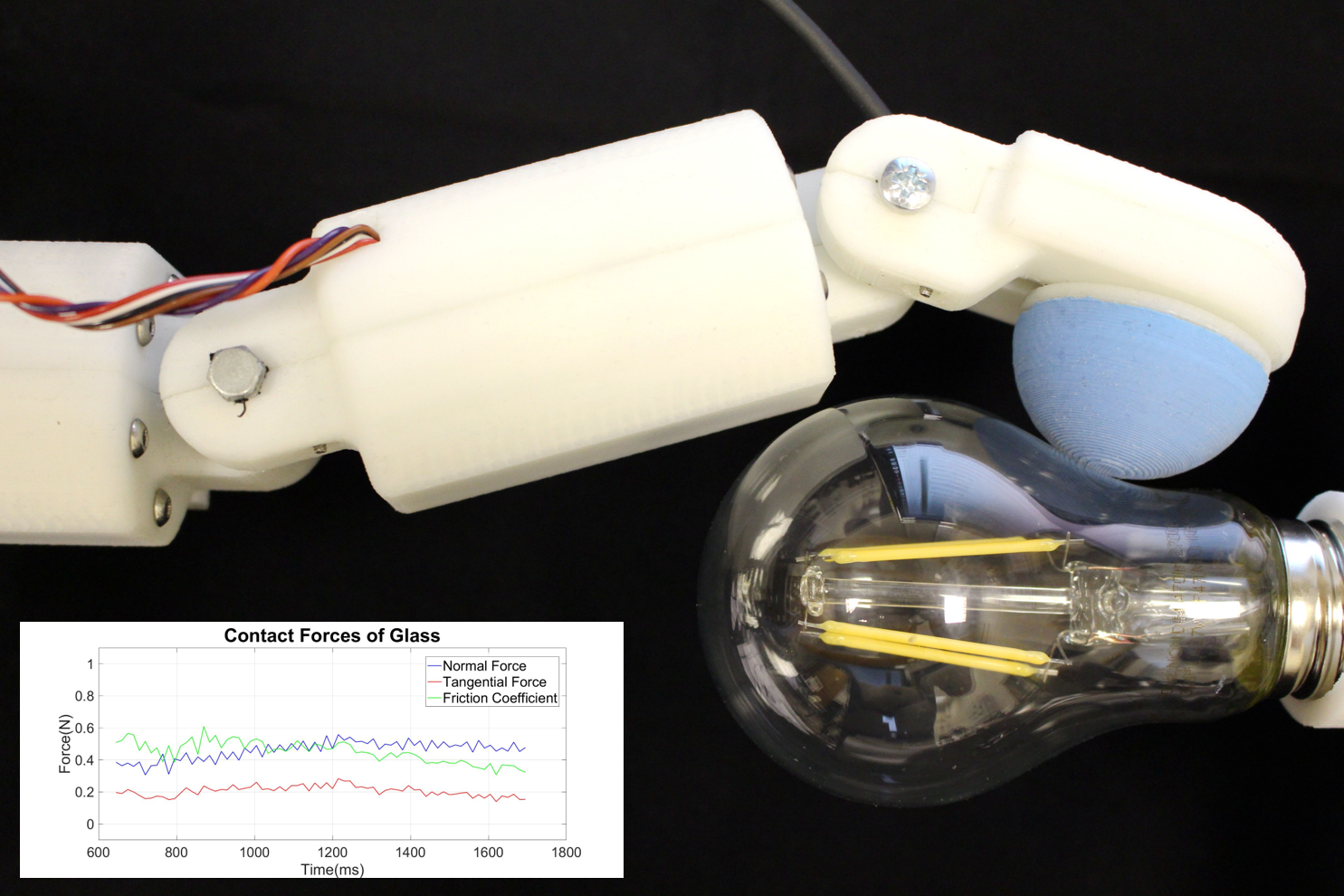

Surface exploration of a light bulb

The video shows a finger controlled to lightly follow the surface of a light bulb. The shape and position of the bulb are unknown to the controller; the action of the finger is decided purely by instantaneous haptic sensations.

J. Back, J. Bimbo, Y. Noh, L. Seneviratne, K. Althoefer, and H. Liu, "Control a contact sensing finger for surface haptic exploration," 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 2736-2741, IEEE, May 2014.

We developed a contact sensing algorithm for a fingertip that is equipped with a 6-axis force/torque sensor and covered with a deformable rubber skin. The algorithm estimates the contact information on the finger, including the contact location on the fingertip, the direction and magnitude of the friction and normal forces, and the local torque generated at the surface, at high speed (158-242 Hz) and with high precision.
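To illustrate how a single force/torque reading can reveal where contact occurs, the sketch below uses a simplified form of classical intrinsic contact sensing. It is not the algorithm described above: it assumes a rigid hemispherical fingertip of known `radius`, a sensor frame at the sphere centre, and a point contact with no local torque, so it ignores both the rubber skin's deformation and the local torque that the actual method estimates. The function name `estimate_contact` is hypothetical.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def estimate_contact(force, moment, radius, min_force=1e-6):
    """Estimate contact point, normal force and friction on a rigid sphere.

    force, moment: wrench measured at the sphere centre (assumed frame).
    Returns (contact_point, normal_force, friction_vector), or None when the
    force is too small or its line of action misses the sphere.
    """
    f_sq = dot(force, force)
    if f_sq < min_force ** 2:
        return None

    # Point on the force's line of action closest to the sensor origin.
    r0 = tuple(c / f_sq for c in cross(force, moment))

    gap = radius ** 2 - dot(r0, r0)
    if gap < 0.0:
        return None

    # Of the two intersections with the sphere, keep the one where the
    # force pushes into the fingertip (negative parameter along the force).
    lam = -math.sqrt(gap / f_sq)
    contact = tuple(r + lam * f for r, f in zip(r0, force))

    normal = tuple(c / radius for c in contact)       # outward surface normal
    normal_force = -dot(force, normal)                # positive when pressing
    friction = tuple(f + normal_force * n for f, n in zip(force, normal))
    return contact, normal_force, friction
```

Each estimate needs only a handful of arithmetic operations per sample, which suggests why this family of methods suits high update rates.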

H Liu, KC Nguyen, V Perdereau, J Back, J Bimbo, M Godden, LD Seneviratne, K Althoefer, "Finger Contact Sensing and the Application in Dexterous Hand Manipulation", Autonomous Robots, accepted, 2015. (PDF)

Once an object has been grasped, its pose (position and orientation) needs to be known accurately in order to assess the stability of the grasp. While 3D vision is very reliable when the object is on a table, once the object is in-hand, the occlusions created by the robot hand itself cause the performance of the tracker to drop significantly. To tackle this problem, tactile sensing was used to correct the vision-based estimate by finding a pose that matched the sensing data.
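The idea of "finding a pose that matches the sensing data" can be sketched as minimising a cost that measures how far the sensed contact points lie from the object's surface under a candidate pose. The example below is a toy illustration under strong assumptions and not the published method: the object is a planar rectangle with known half-extents, the pose is `(x, y, theta)`, and a simple seeded random search stands in for whatever optimiser is actually used. All function names are hypothetical.

```python
import math
import random


def boundary_distance(px, py, half_w, half_h):
    """Distance from a point (object frame) to the rectangle's boundary."""
    qx, qy = abs(px) - half_w, abs(py) - half_h
    outside = math.hypot(max(qx, 0.0), max(qy, 0.0))
    inside = min(max(qx, qy), 0.0)
    return abs(outside + inside)


def tactile_cost(pose, contacts, half_w, half_h):
    """Sum of squared contact-to-surface distances for a candidate pose."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    total = 0.0
    for wx, wy in contacts:
        dx, dy = wx - x, wy - y
        # Express the world-frame contact point in the object frame.
        px, py = c * dx + s * dy, -s * dx + c * dy
        total += boundary_distance(px, py, half_w, half_h) ** 2
    return total


def refine_pose(initial, contacts, half_w, half_h, iters=2000, seed=0):
    """Improve a (vision-based) pose estimate by random local search."""
    rng = random.Random(seed)
    best = initial
    best_cost = tactile_cost(best, contacts, half_w, half_h)
    for _ in range(iters):
        candidate = (best[0] + rng.gauss(0.0, 0.005),
                     best[1] + rng.gauss(0.0, 0.005),
                     best[2] + rng.gauss(0.0, 0.05))
        cost = tactile_cost(candidate, contacts, half_w, half_h)
        if cost < best_cost:  # keep only strict improvements
            best, best_cost = candidate, cost
    return best, best_cost
```

With only a few contacts, several poses can explain the data equally well, so in practice the vision estimate serves as the starting point and the tactile cost as a correction.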

J Bimbo, P Kormushev, K Althoefer, H Liu, "Global Estimation of an Object's Pose Using Tactile Sensing", Advanced Robotics, vol.29, no.5, 363-374, 2015 (PDF)

The sense of touch plays an irreplaceable role when we humans explore the world around us, especially when vision is occluded. In these tasks, our haptic perception system recognizes objects by identifying their geometric properties (i.e. shapes and poses) and physical properties (i.e. temperature, stiffness and material). We are working on extracting this information from the readings of tactile array sensors and combining it with inputs from vision.

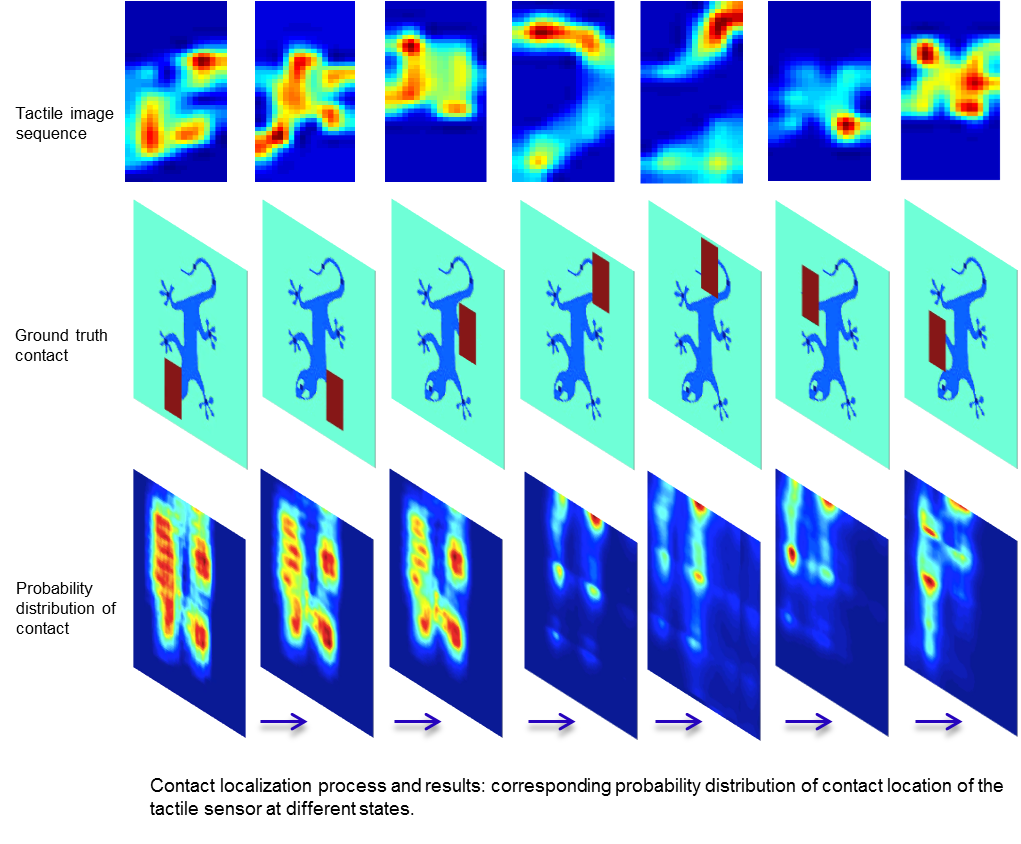

To cope with unknown object movement, we propose a new Tactile-SIFT descriptor that extracts features from gradients in the tactile image to represent objects, so that the features are invariant to object translation and rotation. Intuitively, there are correspondences, e.g. prominent features, between visual and tactile object identification. To apply this in robotics, we propose to localize tactile readings in visual images by sharing the same set of feature descriptors across the two sensing modalities. The localization is then treated as a probabilistic estimation problem and solved in a recursive Bayesian filtering framework.
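The recursive Bayesian filtering step can be illustrated with a discrete Bayes filter over a handful of candidate contact locations in the visual map. This is a minimal sketch under assumptions: the candidate set, the uniform-mixing motion model in `predict`, and the likelihood values (which would in practice come from matching tactile and visual feature descriptors) are all hypothetical.

```python
def predict(belief, stay_prob=0.8):
    """Motion update: keep most of the belief, spread the rest uniformly."""
    n = len(belief)
    total = sum(belief)
    return [stay_prob * b + (1.0 - stay_prob) * total / n for b in belief]


def update(belief, likelihood):
    """Measurement update: posterior is proportional to likelihood * prior."""
    posterior = [b * l for b, l in zip(belief, likelihood)]
    total = sum(posterior)
    if total == 0.0:
        # The reading contradicts every candidate; fall back to uniform.
        return [1.0 / len(belief)] * len(belief)
    return [p / total for p in posterior]


def localize(readings, num_candidates):
    """Fuse a sequence of per-candidate likelihoods into a final belief."""
    belief = [1.0 / num_candidates] * num_candidates
    for likelihood in readings:
        belief = update(predict(belief), likelihood)
    return belief
```

For example, `localize([[0.1, 0.7, 0.1, 0.1]] * 3, 4)` concentrates the belief on the second candidate, because each successive tactile reading reinforces the same location.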

S Luo, W Mou, K Althoefer, H Liu, "Novel Tactile-SIFT Descriptor for Object Shape Recognition", IEEE Sensors Journal, article in press, 2015. (PDF)

S Luo, W Mou, K Althoefer, H Liu, "Localizing the Object Contact through Matching Tactile Features with Visual Map", IEEE International Conference on Robotics and Automation (ICRA), in press, 2015. (PDF)

TSB - Technology Strategy Board, Liu, H., 2013-14, in collaboration with Shadow Robot Company

While dexterous grasping and manipulation has been a very active field of research within academia, there has not been a great deal of successful translation into commercial applications. One of the obstacles to integrating dexterous manipulation into a manufacturing setting is the trade-off between the high degree of dexterity required to achieve the desired flexibility and the complexity that the extra degrees of freedom add to the system. The goal of the GSC project was to endow a dexterous robot hand with the ability to robustly grasp and manipulate objects. Multiple aspects of this task were investigated, such as finding sensing requirements, implementing a vision system to identify and track an object, and developing methods to assess and ensure the stability of a grasped object. The applications of the developed system range from picking and accurately placing objects from a far wider range of parts than previously possible with a simple gripper, to using the robot hand as a "third hand" during assembly tasks, to enabling future concepts in tele-manipulation.

The GSC project is adding new capabilities to the Shadow Dexterous Hands. Software development in GSC now provides Shadow Hands with the following capabilities out of the box:

Automatic grasping for most common objects using 3D vision: you can redeploy on new products in minutes

Performance can be improved on critical objects with simple on-line training: even complex objects can be handled easily

Robust on-line grasp stabilisation: objects won't slip out or be dislodged

On-line pose estimation speeds grasping and manipulation tasks

Intelligence Inside: no operator training needed to set up the system.

All these functions are seamlessly integrated into the Shadow Hand ROS stack. See also the GSC project on Shadow's website.