Dr Oya Celiktutan

Senior Lecturer (Associate Professor)

I have been a Senior Lecturer (Associate Professor) in the Centre for Robotics Research (CoRe) Group within the Department of Engineering, King's College London, United Kingdom, since 2022, where I am the Head of the Social AI and Robotics (SAIR) Lab. I obtained my PhD degree in Electrical and Electronics Engineering from Bogazici University, Turkey, in collaboration with the National Institute of Applied Sciences of Lyon, France. After my PhD, I spent several years as a postdoctoral researcher in the Personal Robotics Lab, Imperial College London; the Graphics and Interaction Research Group (Rainbow), University of Cambridge; and the Multimedia and Vision Research Group, Queen Mary University of London. In 2018, I joined King's College London as a Lecturer in Robotics; the university has the best location in central London -- see my office view.

My research focuses on machine learning for developing socially aware systems capable of autonomous interaction with humans. This encompasses tackling challenges in multimodal perception, understanding and forecasting human behaviour, as well as advancing navigation, manipulation, and human-robot interaction. So far, my team's research has been supported by the EPSRC, The Royal Society, Innovate UK, EU Horizon, and industrial collaborations including Toyota Motor Europe, SoftBank Robotics Europe, and NVIDIA. Here is a short article about SAIR.

Highlights of our research are:

Robot Navigation

Conversational group detection · socially-aware navigation in human environments

Continual Learning

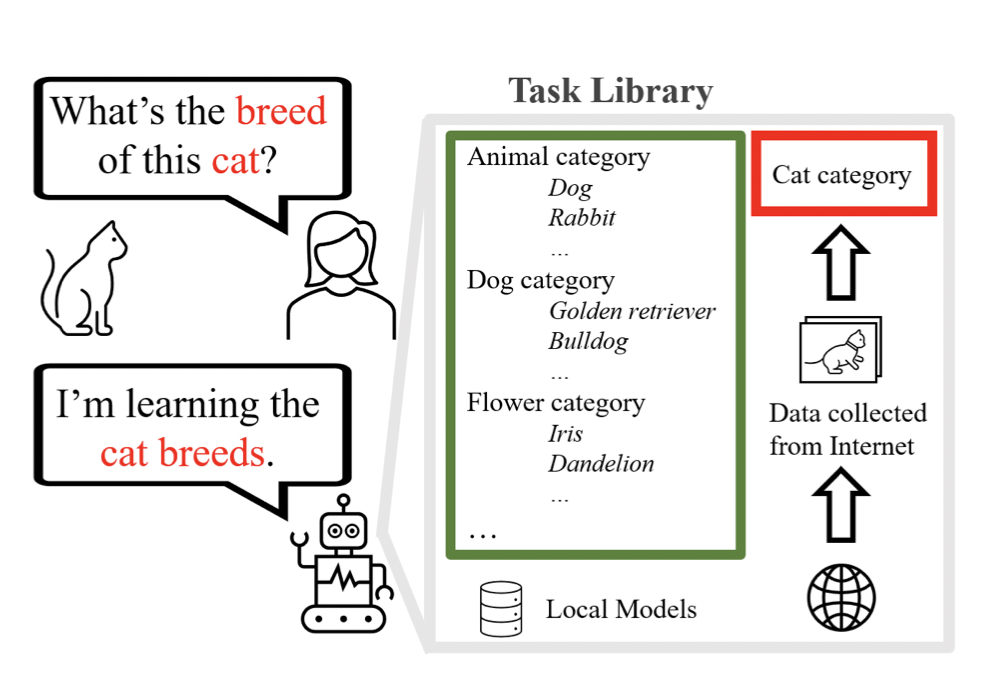

Image classification · class and task incremental learning

Reinforcement Learning

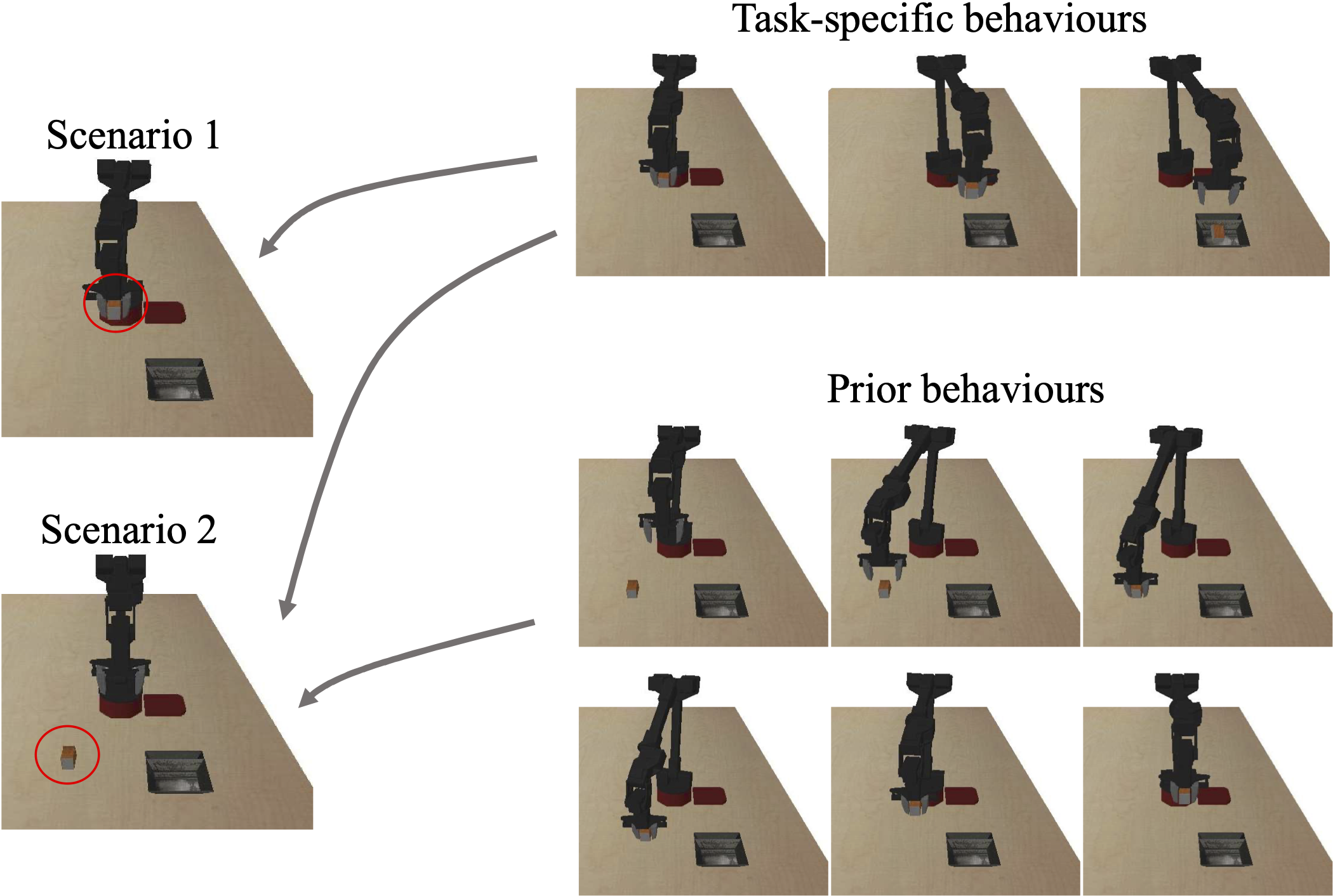

Imitation learning · adaptation to new conditions · agent behaviour modelling

Responsible Robotics

Robotic explanation generation · algorithmic fairness in human behaviour analysis

User Modelling

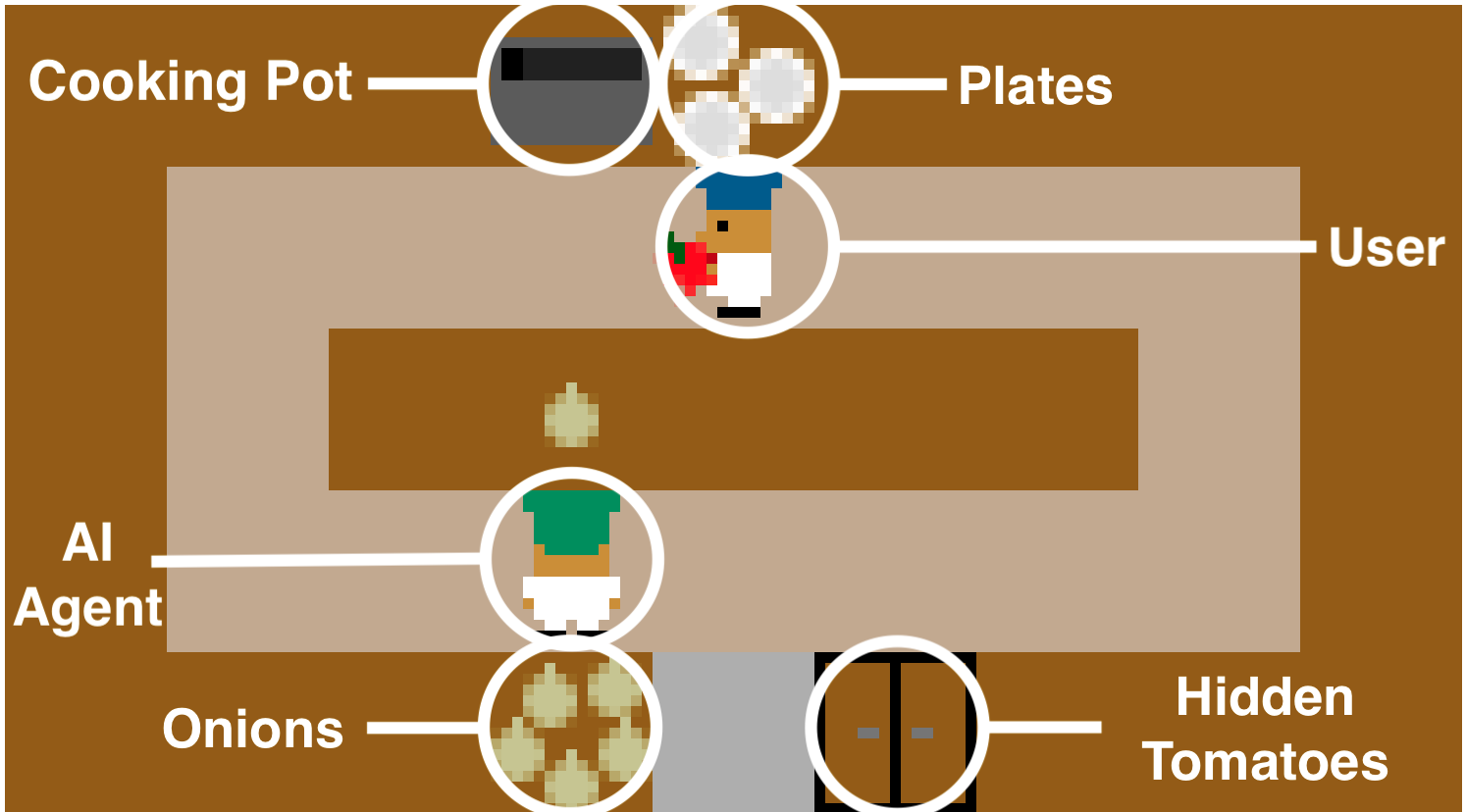

Socially intelligent agents · mental state estimation · eye gaze estimation

Nonverbal Behaviour Generation

Imitating human movements · gesture synthesis · multimodal interaction

Multiparty Interaction

Personality and engagement recognition · multimodal data fusion

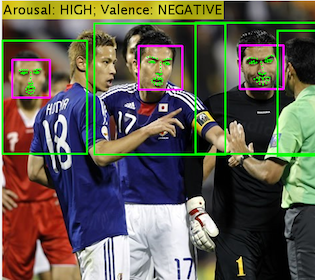

Personality/Emotion Recognition

Nonverbal behaviour analysis · group emotion recognition

Human Activity Recognition

Hyper-graph matching · Hidden Markov Models · HARL dataset

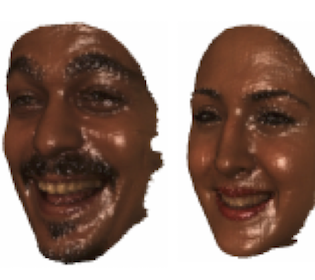

Face Analysis

Face landmarking · Bosphorus 2D/3D face dataset

Camera Source Identification

Image forensics features · sequential feature selection

Projects

2024-2025 (Role: PI)

Social Robots as Psychosocial Support Companions for Enhanced Quality of Life in Paediatric Palliative Care

King's Together Research Award

This project aims to explore the application and practicalities of embedding Socially Assistive Robots (SARs) within Paediatric Palliative Care by first understanding the mental-health benefits that can be derived from synthetic companionship with SARs and then discovering their potential for enhancing the quality of life of sick children.

2022-2025 (Role: co-I)

SERMAS - Socially Acceptable Extended Reality Systems

EU/Innovate UK

SERMAS aims to develop innovative, formal and systematic methodologies and technologies to model, develop, analyse, test and user-study socially acceptable XR systems. [More info]

2022-2023 (Role: PI)

CL-HRI - Continual Learning Towards Open World Human-Robot Interaction

The Royal Society Seed Fund

The vision of the CL-HRI project is to 1) develop scalable methods that can advance the current state of continual learning in human-centric computer vision tasks; and 2) integrate the developed techniques with real robots to enable them to constantly adapt and automatically exploit new information for generating appropriate interactive behaviours.

2021-2023 (Role: PI)

LISI - Learning to Imitate Social Interaction

EPSRC New Investigator Award

The LISI project aims to set the basis for the next generation of robots that will be able to learn how to act in concert with humans by watching human-human interactions.

2019-2020 (Role: Joint PI)

Wiring the Smart City

King's Together Multi & Interdisciplinary Research Award

An exciting multidisciplinary project that is on a mission to build a 5G connected smart platform for public safety and health in large urban areas such as London. The team is distributed across three King’s faculties, namely NMS, IoPPN, and SSPP, and five research centres including CTR, CoRe, CTI, CUSP, and SLaM. More info will follow - stay tuned!

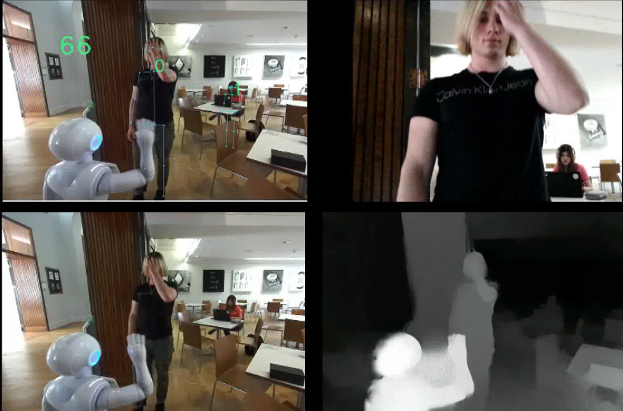

2017-2018 (Role: Post-doc)

Personal Assistant for Healthy Lifestyle (PAL)

Horizon 2020

The PAL project aimed to develop (mobile) health applications to assist diabetic children through a social robot and its mobile virtual avatar. My role involved developing vision-based methods for estimating users’ mental states to enable system personalisation mechanisms.

2014-2017 (Role: Post-doc)

Being There

EPSRC

This project was a unique collaboration between researchers and artists to enable people to access public spaces through cutting-edge robotic telepresence systems. My role involved developing (multimodal) methods to automatically model nonverbal cues during human-robot interaction.

2013-2014 (Role: Post-doc)

MAPTRAITS

EPSRC

MAPTRAITS aimed at developing natural, human-like virtual agents that can not only sense their users, but also adapt their own personalities to their users' personalities. My role involved devising a real-time personality prediction system.

Teaching

Sensing and Perception

This module introduces learners to the design of sensing and perception algorithms for robotic and autonomous systems. Learners will implement a range of algorithms used by a robot to sense and interpret its surroundings, with sensors such as 2D and 3D cameras, LIDAR and radar, and test these algorithms in design applications. The module also covers advanced techniques to fuse measurements from proprioceptive and exteroceptive sensors for improved state estimation.

Sensors and Actuators

In the implementation of mechatronic and other control systems, a key component is the integration of computational brainpower with sensing (measurement of unknown signals or parameters) and actuation (affecting the surrounding environment). During this module, I teach the Sensors part and introduce advanced concepts in sensing for mechatronic and other relevant systems, including the estimation of parameters from measurements and analogue sensors.

News

Open Positions

- I am happy to support strong candidates in applying for a PhD. Please see funding opportunities at King's. Desirable criteria include (1) experience in at least one of the following areas: computer vision, machine learning, human-robot interaction; (2) at least one paper (either published, under review or to be submitted, or a project report) demonstrating experience in the area(s) above; and (3) strong programming skills, particularly in Python/C++. If you are interested, just drop me an email!

Highlights

- I gave a talk at the 3rd NAVER LABS Europe International Workshop on AI for Robotics (2023). Check out the recordings.

Selected Activities

- General Chair of ACII 2024, DC Chair of FG 2024, and Publication Chair of ICMI 2024.

- Area Chair (e.g., MM, ICMI, and BMVC), and Reviewer (e.g., CVPR, ECCV, ICCV, NeurIPS, WACV, HRI, and ICRA).

- Associate Editor of the Journal of The Franklin Institute, and Review Editor for Human-Robot Interaction in Frontiers in AI and Robotics.

- Co-organiser of MASSXR Workshop at VR 2024, and SARER Insight Session at ERF 2024.